Interpret is excited to announce that our new product line, inDev, is now available to our clients. Our internal team of expert Game Evaluators has an extensive history of analyzing games in development and giving developers actionable feedback to improve them. We're thrilled to have this team on board, and we sat down with Matt Diener, who leads the product line, to ask some key questions about inDev and his team.

For more information, including free examples of each product, please visit our inDev product page.

Interview

Q: How does one become a professional game evaluator? What experience is needed?

Most of us began as professional critics and game reviewers. The most important combination of skills is an understanding of game design trends, industry trends, and review landscape trends.

Q: What makes your approach unique?

While many Mock Reviews from freelancers tell you what the reviewer personally thinks of a game, our approach tells you how the game will perform in aggregate: what critics and reviewers are likely to call out as positives and negatives, in addition to the score it's likely to receive. Our comprehensive grasp of the market and review landscape, along with our experience as critics, gives us a unique perspective.

Q: How many games have you and your team evaluated?

We’ve reviewed well over 500 titles on every platform (PC, console, VR, mobile, dedicated gaming handhelds, etc.) and across all genres and subgenres, including 80+ hour single-player action RPGs, MMORPGs, Match-3 puzzle games, and VR shooters. We’re experienced at all stages of development, from gray box / pre-alpha to gold master, so we’re comfortable providing forward-looking feedback on WIP builds without being distracted by elements consumers rarely see.

Q: How big is your team?

Currently, we are a team of five core reviewers within a much larger team of 85 analysts devoted to games and technology research, and we are growing fast. We are actively mentoring newcomers, who train for three years before they're allowed to take on lead analyst roles for projects.

Q: How long does it take to conduct a Game Evaluation or a Mock Review?

A lot depends on the playable length of the game, but we typically deliver reports within 5-8 business days, with the lead analyst focusing 100% of their working time on an individual game until the report is completed.

Q: Why would someone need to use outside feedback? Can’t game developers do it themselves?

We provide a fresh, objective perspective. Our feedback as a third party is free of the biases that may color internal reviews. Given our broad experience base, we’re particularly adept at providing competitive feedback on game feel, messaging, input mapping, and game economies, to name a few.

Q: What is the biggest difference between the evaluation you give vs. a standard consumer playtest?

Our Game Evaluations and Mock Reviews go deeper than consumer playtests, but neither is meant as a replacement for the other.

Our reports are designed with game development teams in mind. Whereas a consumer playtest will tell you what typical players in your primary audience might say about the game and what frustrations they run into, a Game Evaluation or Mock Review from Interpret involves us playing your available game build from start to finish and analyzing fine details of the game’s features and supporting documentation to provide meaningful suggestions for development improvement. We thoroughly examine the underpinnings of a game’s design (its core loops, “three C’s”, and core systems) to point out concerns and describe what can be done to improve its overall performance.

Questions About Our Process and Results

Q: Since you evaluate games in development, do you overlook bugs, crashes, and glitches? If so, how?

We meet with clients to set these expectations when we begin each project. We generally ignore minor bugs and glitches and do not factor them into our analysis unless the game is at or rapidly approaching release. If we notice a particularly jarring or major bug, we will check with the client to see if it’s a known issue before making note of it in the report. If a game is very close to release and we see a fair number of bugs, we will call these out to the client before putting them in the report.

Developers are usually aware of most bugs in their builds, so we focus our attention on the deeper, holistic areas where the game can improve.

Q: How accurate has your team been at predicting Metascores?

Ninety percent of our score predictions fall within plus or minus three points of the score a game receives at release. We conduct yearly post-mortems to reflect on our hits and misses and to keep our accuracy in line with what we tell clients.

Q: What sorts of actions have clients taken to improve their games as a direct result of your input?

In earlier stages, our recommendations have helped clients completely shift the direction of their games, such as changing a licensed game concept to an original IP, switching the art style, adding narrative elements, and more. Even when clients are only a few months out from their game’s release, they have leveraged our feedback to improve aspects such as balancing certain stages/encounters and fine-tuning the first-time user experience.

Q: What is the difference between a Concept Evaluation, Game Evaluation, and Mock Review?

Concept Evaluations are best done very early in the game's development, often at or before greenlight, to inform go/no-go decisions and/or to make fundamental changes to the game's design.

Game Evaluations are development-focused reports and are best employed when there is a vertical slice or playable build available. These reports examine graphics, sound design, input mapping, genre expectations, design innovation, player agency, performance, and more to provide recommendations to improve the game’s quality.

Mock Reviews are leveraged when the game is at or near gold master, and they are meant for marketing, PR, and development teams. These reports provide a comprehensive look at what critics from different regions (Europe, North America, Asia, etc.) are likely to say about the game and how it can be better positioned and messaged to improve its reaction.

Q: How do you handle games with multiplayer components?

In addition to clients playing with us directly, we bring in other Interpret analysts for large multiplayer sessions. These analysts play alongside the project lead and share their play session notes, which the lead factors into the report.

Q: How many hours do you engage with a game for your evaluation?

We typically spend a minimum of 8 hours playing/replaying even the shortest vertical slices, and we spend upwards of 60 hours with longer game builds.

Light-Hearted Questions

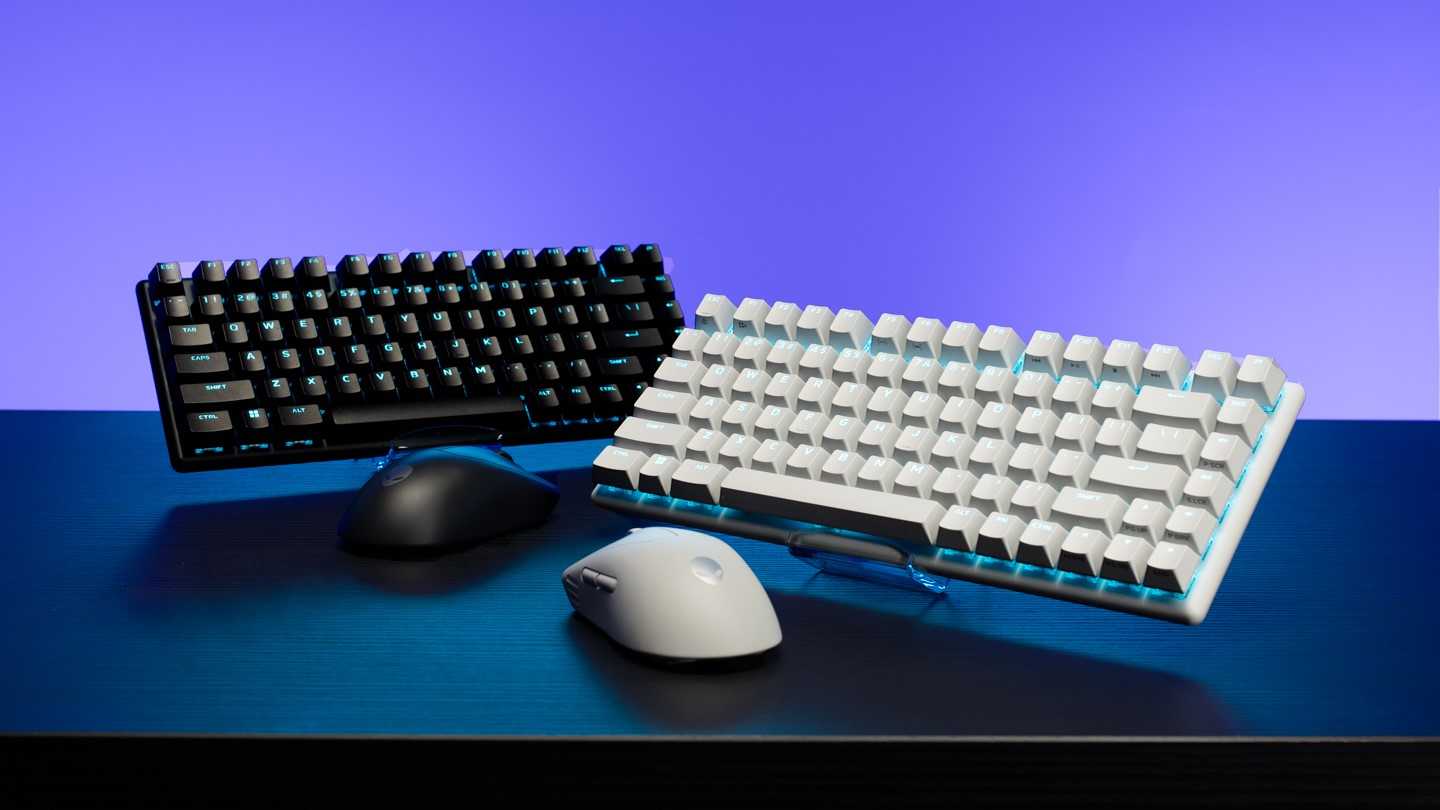

Q: What does your PC rig setup look like to play games that are in development and sometimes 4 years away from release?

We are equipped with top-tier gaming PCs. Since even a narrative game can place a heavy burden on PCs in an unoptimized state, we find it’s better to be way over-spec’d. We upgrade our gaming setups at least once a year.

Q: What is your favorite part of the evaluation process?

We love helping clients answer questions they’re struggling with and hearing their excitement when we make suggestions they hadn’t considered. Many of our clients eat, breathe, and live the games they’re working on and might miss broader industry trends from outside of their genre or platform. We bring our market-level perspective and expertise to their games, and we often spark ideas or validate concerns teams might have been harboring but needed to hear from another source.